AI Inference Market Size And Trends, Industry Report, 2030

AI Inference Market Size, Share & Trends Analysis Report By Memory (HBM, DDR), By Compute, By Application (Generative AI, Machine Learning, Natural Language Processing (NLP), Computer Vision), By End-use, By Region, And Segment Forecasts, 2025 - 2030

- Report ID: GVR-4-68040-596-6

- Number of Report Pages: 200

- Format: PDF

- Historical Range: 2018 - 2023

- Forecast Period: 2025 - 2030

- Industry: Technology

AI Inference Market Summary

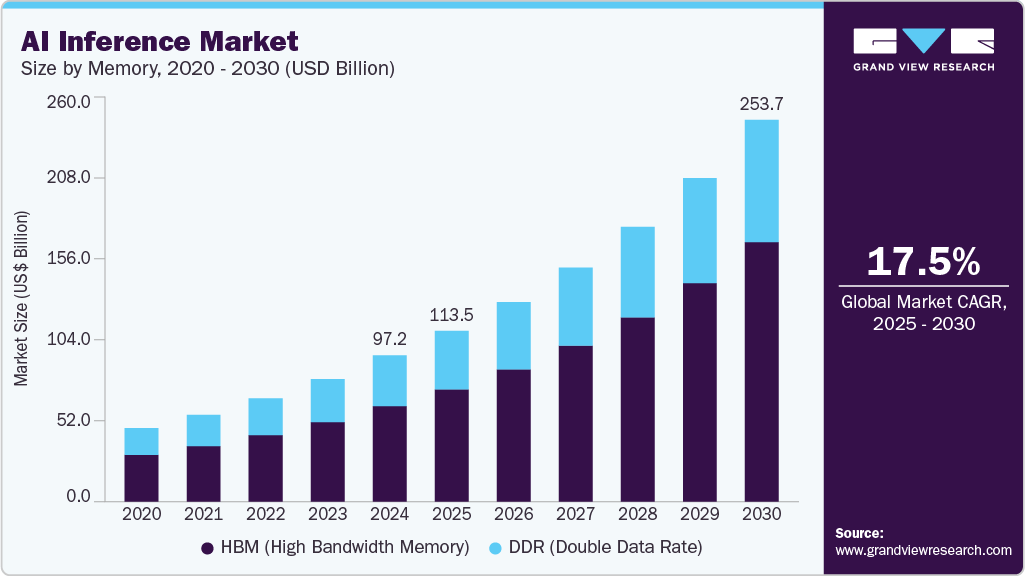

The global AI inference market size was estimated at USD 97.24 billion in 2024 and is projected to reach USD 253.75 billion by 2030, growing at a CAGR of 17.5% from 2025 to 2030. The demand for integrated AI infrastructure continues to grow as organizations focus on faster and more efficient AI inference deployment.

Market Size & Trends:

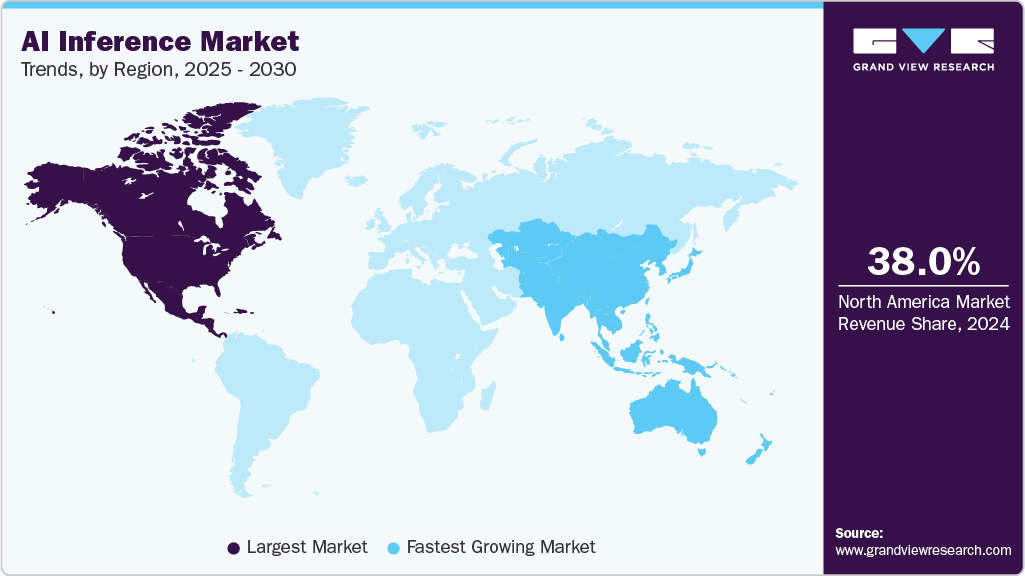

- The North America AI inference market led the global industry with a revenue share of 38.0% in 2024.

- The AI inference market in the U.S. is expected to register a significant CAGR over the forecast period.

- By memory, the HBM (High Bandwidth Memory) segment held the largest revenue share of 65.3% in 2024.

- By compute, the GPU segment accounted for the largest revenue share of 52.1% in 2024.

- By application, the machine learning models segment held the largest share of 36.0% in 2024.
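The shares above can be turned into rough revenue figures by multiplying each one by the 2024 market size of USD 97.24 billion. The Python sketch below does that arithmetic. The results are implied estimates rather than figures stated in this report, and the dictionary keys and the `implied_revenue` helper are illustrative names.

```python
# Implied 2024 revenue for each leading segment, derived from reported shares.
# These are back-of-the-envelope estimates, not values quoted in the report.
MARKET_2024_USD_BN = 97.24  # reported 2024 market size, USD billion

# Each share belongs to a different segmentation (region, memory, compute,
# application), so the shares are not expected to sum to 100%.
leading_shares_pct = {
    "North America (region)": 38.0,
    "HBM (memory)": 65.3,
    "GPU (compute)": 52.1,
    "Machine learning (application)": 36.0,
}


def implied_revenue(total_bn: float, share_pct: float) -> float:
    """Return the revenue in USD billion implied by a percentage share."""
    return total_bn * share_pct / 100.0


implied = {
    name: round(implied_revenue(MARKET_2024_USD_BN, pct), 2)
    for name, pct in leading_shares_pct.items()
}

for name, value in implied.items():
    print(f"{name}: ~USD {value} billion")
```

Under these assumptions, North America works out to roughly USD 37 billion and the HBM segment to roughly USD 63.5 billion of 2024 revenue.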

Key Market Statistics:

- 2024 Market Size: $97.24 Billion

- 2030 Estimated Market Size: $253.75 Billion

- CAGR: 17.5% (2025-2030)

- North America: Largest market in 2024

- Asia Pacific: Fastest growing region

Enterprises prioritize platforms that unify computing power, storage, and software to streamline AI workflows. This integration simplifies management while boosting scalability and inference speed. Reducing setup time and operational complexity is key for handling real-time AI workloads. Privacy and security remain critical factors driving infrastructure choices. These trends are pushing broader adoption of comprehensive AI inference solutions.

There is a growing emphasis on supporting many AI models to address various business requirements. For instance, in March 2025, Oracle Corporation and NVIDIA Corporation announced a collaboration to integrate NVIDIA’s AI software and hardware with Oracle Cloud Infrastructure, enabling faster deployment of agentic AI applications. The collaboration offers over 160 AI tools, NIM microservices, and no-code blueprints for scalable enterprise AI solutions.

Enterprises require flexibility to deploy models suited to their specific tasks. Supporting a broad range of AI accelerators helps optimize performance across diverse hardware and ensures compatibility with both existing infrastructure and future technology. It lets organizations choose the best tools for their AI workloads and integrate new AI technologies as they emerge. For instance, in May 2025, Red Hat, a U.S.-based software company, launched the AI Inference Server, an enterprise solution designed to run generative AI models efficiently across hybrid cloud environments using any accelerator. This platform aims to deliver high-performance, scalable, and cost-effective AI inference with broad hardware and cloud compatibility.

The AI inference market is experiencing rapid growth driven by a strong need for real-time AI processing across many industries. Businesses are increasingly relying on AI to analyze data quickly and make instant decisions, which improves operational efficiency and customer experiences. Industries such as autonomous vehicles, healthcare, retail, and manufacturing are demanding faster, more accurate AI inference to support applications such as object detection, diagnostics, personalized recommendations, and automation. This rising demand pushes companies to develop and adopt more advanced inference technologies that deliver high performance with low latency. Moreover, the expansion of connected devices and the Internet of Things (IoT) fuels the requirement for immediate AI insights at the edge. As a result, investments in specialized AI chips and optimized software frameworks have surged. The market is expected to maintain its strong growth trajectory as AI inference becomes critical in digital transformation strategies worldwide.

Memory Insights

The HBM (High Bandwidth Memory) segment dominated the AI inference market with a revenue share of 65.3% in 2024. HBM offers faster data transfer speeds than traditional memory types, which is crucial for efficiently handling large AI workloads. As a result, many AI systems use HBM to meet high-performance demands. HBM’s architecture allows for greater bandwidth and lower power consumption, making it well suited to AI inference tasks. Its ability to handle massive data throughput supports complex neural network computations. Companies developing AI hardware are increasingly integrating HBM to boost overall system performance. This trend is likely to continue as AI applications require faster and more efficient memory solutions.

Double Data Rate (DDR) memory offers a balance of speed, capacity, and cost-effectiveness. Its ability to deliver faster data transfer rates helps AI systems access and process large amounts of information quickly. Compared to more specialized memory types, DDR is more affordable and easier to integrate into a wide range of devices and infrastructures. This makes it widely used in data centers, edge computing, and enterprise AI applications. As AI models grow in size and complexity, the need for efficient and reliable memory solutions such as DDR increases. Its broad compatibility allows organizations to enhance AI performance without excessive expenses. DDR memory supports AI inference by enabling quicker and more efficient data handling.

Compute Insights

The GPU segment accounted for the largest revenue share of 52.1% in 2024. Graphics Processing Units (GPUs) have long dominated the AI inference market due to their superior parallel processing capabilities. Their high computational throughput makes them ideal for running complex deep learning models. GPUs benefit from a mature software ecosystem, including frameworks such as TensorFlow and PyTorch. They are widely used in cloud and data center environments for tasks such as computer vision and natural language processing. Major cloud providers continue to deploy large-scale GPU infrastructure to meet growing AI inference demands. Despite emerging alternatives, GPUs remain the standard for high-performance inference applications.

Neural Processing Units (NPUs) are rapidly gaining attention in the AI inference market due to their efficiency and task-specific design. Unlike general-purpose GPUs, NPUs are built specifically for the matrix and tensor operations used in AI workloads. This specialization allows them to deliver low-latency, low-power inference, which is especially critical for edge and mobile devices. As AI models are increasingly deployed on smartphones, wearables, and vehicles, NPUs offer an attractive alternative. Leading chipmakers are now integrating NPUs into SoCs to support local inference. Their adoption is accelerating as industries seek faster, more power-efficient AI processing closer to the source of data.

Application Insights

The machine learning (ML) models segment held the largest share of the AI inference market at 36.0% in 2024. These models, including decision trees, support vector machines, and shallow neural networks, are computationally lighter and easier to deploy. ML-based inference is widely used in fraud detection, recommendation systems, and predictive maintenance. Their relatively low latency and resource efficiency make them ideal for real-time applications. Enterprises continue to rely on ML for its proven accuracy and scalability across structured data tasks. Despite evolving trends, machine learning remains the foundation for many industrial AI inference deployments.

Generative AI is rapidly transforming the AI inference sector with models capable of producing text, images, and audio. Technologies such as large language models (LLMs) and diffusion models are driving demand for more powerful inference hardware. These models require higher computational resources, often involving billions of parameters. As a result, inference is shifting toward optimized accelerators that can handle generative tasks efficiently. Use cases are expanding across content creation, virtual assistants, code generation, and design automation. The rise of generative AI is reshaping infrastructure needs and creating new growth areas within the inference market.

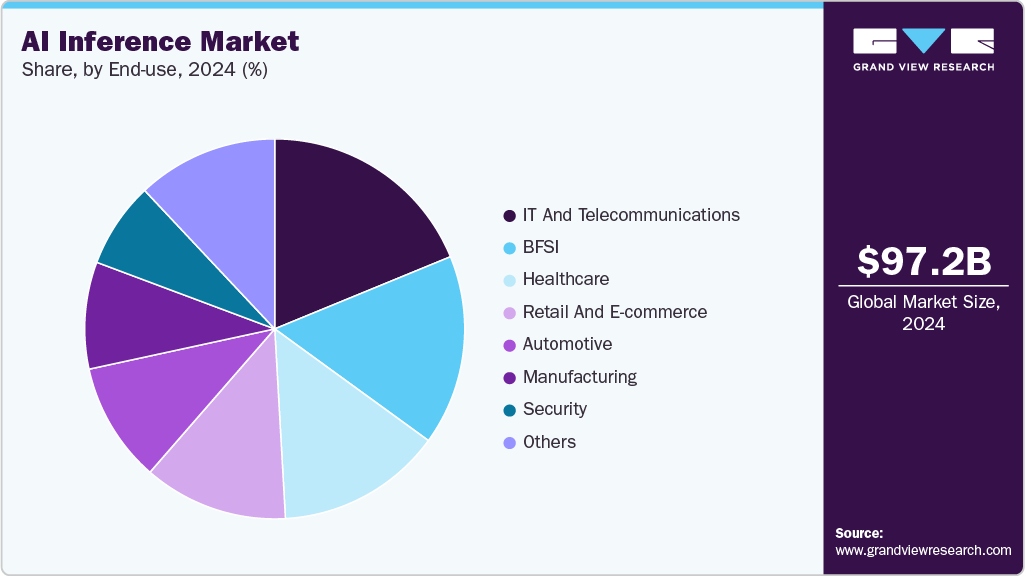

End-use Insights

The IT and telecommunications segment held the largest market revenue share in 2024, owing to the sector's early adoption of data-driven technologies. AI is widely deployed in network optimization, predictive maintenance, and customer support automation. Telecom companies use AI inference to manage massive data flows and enhance service reliability. Cloud providers and IT firms rely on AI to streamline operations and deliver intelligent software services. Their robust infrastructure supports large-scale deployment of inference models across various applications. This established presence continues to anchor the sector’s leading position in the market.

The healthcare industry is rapidly adopting AI inference to improve diagnostics, treatment planning, and operational efficiency. AI integration is driving applications such as medical imaging analysis, patient monitoring, and drug discovery. Inference models help detect scan anomalies, predict patient outcomes, and personalize care plans. Growing investments in digital health infrastructure support wider adoption of AI tools across hospitals and labs. Regulatory advancements and increased trust in AI-powered solutions are further accelerating uptake. As a result, healthcare is becoming one of the fastest-growing sectors in the AI inference market.

Regional Insights

The North America AI inference market dominated the global market with a share of 38.0% in 2024, a lead built on early adoption and enterprise maturity. The region benefits from widespread AI integration in the IT, telecom, and automotive industries. Strong venture funding and university-industry collaborations drive ongoing innovation. Cloud-based inference platforms are widely deployed across both public and private sectors. Demand for real-time and edge inference solutions continues to rise.

U.S. AI Inference Market Trends

The U.S. leads the AI inference market with advanced infrastructure and a strong base of cloud service providers. Major tech companies continue to invest heavily in AI hardware and software optimization. Use cases span across healthcare, finance, manufacturing, and autonomous systems. Government support for AI innovation also reinforces market expansion. The presence of specialized chip manufacturers further strengthens its position.

Europe AI Inference Market Trends

Europe’s AI inference market is growing steadily, supported by digital transformation and policy-driven AI development. Countries such as Germany, France, and the UK are advancing AI use in manufacturing, transportation, and energy. Regulatory focus on data privacy and responsible AI encourages trustworthy model deployment. Public-private partnerships are accelerating AI applications in healthcare and public services. Investments in AI chips and edge computing are increasing across the region.

Asia Pacific AI Inference Market Trends

Asia Pacific is the fastest-growing region in the AI inference market, led by China, Japan, and South Korea. The rise of smart cities, 5G infrastructure, and AI-integrated devices is fueling demand. Local tech companies are embedding inference capabilities into mobile phones, EVs, and industrial systems. Government initiatives are actively supporting AI R&D and semiconductor development. The region is also seeing strong momentum in edge AI applications.

Key AI Inference Market Company Insights

Some of the key companies in the AI inference market include Google LLC, Intel Corporation, Microsoft, Mythic, NVIDIA Corporation, and others. Organizations are focusing on expanding their customer base to gain a competitive edge in the industry. To that end, key players are pursuing strategic initiatives such as mergers and acquisitions and partnerships with other major companies.

- Amazon Web Services, Inc. has developed the Inferentia2 chip to enhance deep learning inference performance. This chip delivers up to 4 times higher throughput and up to 10 times lower latency compared to its predecessor, Inferentia. It supports large models, including large language models and vision transformers, making it suitable for generative AI applications. The Inferentia2 chip is integrated into EC2 Inf2 instances, which offer ultra-high-speed connectivity between chips, enabling distributed inference at scale.

- Google LLC's Ironwood is the seventh-generation Tensor Processing Unit (TPU), specifically designed for AI inference tasks. It is Google's most powerful and energy-efficient TPU to date, built to handle complex models such as large language models and mixture of experts. Ironwood scales up to 9,216 chips, offering 42.5 exaflops of compute power, surpassing the performance of the world's largest supercomputer. The chip features enhanced SparseCore, increased high-bandwidth memory capacity, and improved Inter-Chip Interconnect (ICI) networking.
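As a rough sense of scale, the Ironwood figures quoted above (42.5 exaflops across 9,216 chips) imply a per-chip peak that can be derived by simple division. The sketch below assumes the aggregate figure is a straight sum over identical chips, which the text does not state explicitly; the variable names are illustrative.

```python
# Per-chip peak compute implied by the quoted Ironwood pod figures.
# Assumption: the pod total is a plain sum over identical chips.
POD_EXAFLOPS = 42.5   # quoted aggregate compute for a full-scale pod
POD_CHIPS = 9216      # quoted maximum number of chips

PETAFLOPS_PER_EXAFLOP = 1000

per_chip_petaflops = POD_EXAFLOPS * PETAFLOPS_PER_EXAFLOP / POD_CHIPS

print(f"Implied peak per chip: ~{per_chip_petaflops:.2f} petaflops")
```

This works out to roughly 4.6 petaflops per chip. Peak figures of this kind depend on numeric precision and workload, so they indicate scale rather than sustained inference throughput.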

Key AI Inference Companies:

The following are the leading companies in the AI inference market. These companies collectively hold the largest market share and dictate industry trends.

- Amazon Web Services, Inc.

- Arm Limited

- Advanced Micro Devices, Inc.

- Google LLC

- Intel Corporation

- Microsoft

- Mythic

- NVIDIA Corporation

- Qualcomm Technologies, Inc.

- SambaNova Systems, Inc.

Recent Developments

- In February 2025, HTEC, a software development company, partnered with d-Matrix, an AI inference hardware startup, to support the development of d-Matrix's Corsair AI computing platform. The partnership will leverage HTEC's expertise in AI and embedded software to enhance d-Matrix's efficient in-memory compute solutions for AI workloads in data centers.

- In October 2024, OpenAI, a U.S.-based artificial intelligence company, collaborated with Broadcom and consulted with Taiwan Semiconductor Manufacturing Company Limited, a semiconductor manufacturing company, to develop a specialized AI inference chip, moving away from a primary focus on GPUs for training. The initiative aims to produce custom silicon optimized for running AI models and responding to user requests, a less expensive and quicker path than establishing its own chip manufacturing.

AI Inference Market Report Scope

| Report Attribute | Details |
| --- | --- |
| Market size value in 2025 | USD 113.47 billion |
| Revenue forecast in 2030 | USD 253.75 billion |
| Growth rate | CAGR of 17.5% from 2025 to 2030 |
| Base year for estimation | 2024 |
| Historical data | 2018 - 2023 |
| Forecast period | 2025 - 2030 |
| Quantitative units | Revenue in USD million/billion, and CAGR from 2025 to 2030 |
| Report coverage | Revenue forecast, company ranking, competitive landscape, growth factors, and trends |
| Segment scope | Memory, compute, application, end-use, region |
| Region scope | North America; Europe; Asia Pacific; Latin America; Middle East & Africa |
| Country scope | U.S.; Canada; Mexico; Germany; UK; France; China; Japan; India; Australia; South Korea; Brazil; KSA; UAE; South Africa |
| Key companies profiled | Amazon Web Services, Inc.; Arm Limited; Advanced Micro Devices, Inc.; Google LLC; Intel Corporation; Microsoft; Mythic; NVIDIA Corporation; Qualcomm Technologies, Inc.; SambaNova Systems, Inc. |
| Customization scope | Free report customization (equivalent up to 8 analysts’ working days) with purchase. Addition or alteration to country, regional & segment scope |
| Pricing and purchase options | Avail customized purchase options to meet your exact research needs. |
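The headline figures in the report scope can be cross-checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The Python sketch below applies it to the 2025 and 2030 values, treating 2025 to 2030 as five compounding periods; that period count and the function names are assumptions made for illustration, not the report's stated methodology.

```python
# Cross-check of the reported growth figures using the standard CAGR formula.
# Assumption: 2025 -> 2030 is treated as five annual compounding periods.
SIZE_2024_USD_BN = 97.24
SIZE_2025_USD_BN = 113.47
SIZE_2030_USD_BN = 253.75
REPORTED_CAGR = 0.175


def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over `years` periods."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value reached by compounding `start_value` at `rate` for `years` periods."""
    return start_value * (1 + rate) ** years


implied_cagr = cagr(SIZE_2025_USD_BN, SIZE_2030_USD_BN, 5)
projected_2030 = project(SIZE_2025_USD_BN, REPORTED_CAGR, 5)
growth_2024_to_2025 = SIZE_2025_USD_BN / SIZE_2024_USD_BN - 1

print(f"Implied CAGR 2025-2030: {implied_cagr:.2%}")
print(f"2030 size at a 17.5% CAGR: USD {projected_2030:.2f} billion")
print(f"Growth 2024-2025: {growth_2024_to_2025:.2%}")
```

The implied rate comes out just under 17.5%, and compounding the 2025 value at exactly 17.5% lands slightly above USD 253.75 billion, so the small gap is consistent with rounding in the published CAGR.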

Global AI Inference Market Report Segmentation

This report forecasts revenue growth at global, regional, and country levels and provides an analysis of the latest industry trends and opportunities in each of the sub-segments from 2018 to 2030. For this study, Grand View Research has segmented the global AI Inference market report in terms of memory, compute, application, end-use, and region.

- Memory Outlook (Revenue, USD Billion, 2018 - 2030)
  - HBM (High Bandwidth Memory)
  - DDR (Double Data Rate)
- Compute Outlook (Revenue, USD Billion, 2018 - 2030)
  - GPU
  - CPU
  - FPGA
  - NPU
  - Others
- Application Outlook (Revenue, USD Billion, 2018 - 2030)
  - Generative AI
  - Machine Learning
  - Natural Language Processing (NLP)
  - Computer Vision
  - Others
- End Use Outlook (Revenue, USD Billion, 2018 - 2030)
  - BFSI
  - Healthcare
  - Retail and E-commerce
  - Automotive
  - IT and Telecommunications
  - Manufacturing
  - Security
  - Others
- Regional Outlook (Revenue, USD Billion, 2018 - 2030)
  - North America
    - U.S.
    - Canada
    - Mexico
  - Europe
    - UK
    - Germany
    - France
  - Asia Pacific
    - China
    - Japan
    - India
    - Australia
    - South Korea
  - Latin America
    - Brazil
  - Middle East & Africa (MEA)
    - KSA
    - UAE
    - South Africa

Frequently Asked Questions About This Report

- The global AI inference market size was estimated at USD 97.24 billion in 2024 and is expected to reach USD 113.47 billion in 2025.

- The global AI inference market is expected to grow at a compound annual growth rate of 17.5% from 2025 to 2030 to reach USD 253.75 billion by 2030.

- North America dominated the AI inference market with a share of 38.0% in 2024. This is attributable to the presence of advanced AI infrastructure, strong investment in AI research and development, and widespread adoption of AI applications across key industries such as healthcare, automotive, and finance.

- Some key players operating in the AI inference market include Amazon Web Services, Inc., Arm Limited, Advanced Micro Devices, Inc., Google LLC, Intel Corporation, Microsoft, Mythic, NVIDIA Corporation, Qualcomm Technologies, Inc., and SambaNova Systems, Inc.

- Key factors driving market growth include rising AI adoption, demand for edge inference, hardware advancements, and growing investments in data centers.
