Multimodal AI Market Size, Share & Trends Analysis Report By Component (Software, Service), By Data Modality (Text Data, Speech & Voice Data), By End Use (Media And Entertainment, BFSI), By Enterprise Size, By Region, And Segment Forecasts, 2025 - 2030

- Report ID: GVR-4-68040-187-5

- Number of Report Pages: 100

- Format: PDF

- Historical Range: 2017 - 2024

- Forecast Period: 2025 - 2030

- Industry: Technology

Multimodal AI Market Size & Trends

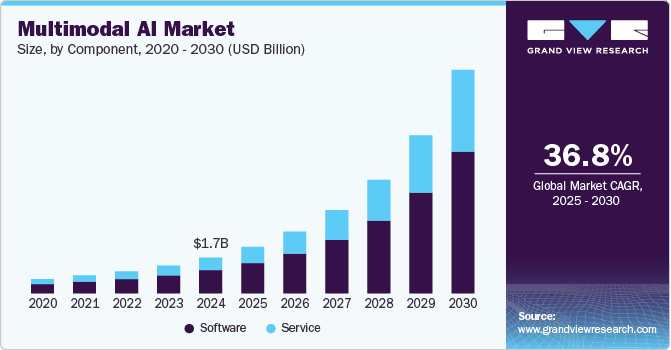

The global multimodal AI market size was estimated at USD 1.74 billion in 2024 and is projected to grow at a CAGR of 36.8% from 2025 to 2030. Multimodal AI uses diverse data types, including video, audio, speech, images, text, and traditional numerical datasets, to improve its capability to make precise predictions, derive insightful conclusions, and offer accurate solutions to real-world issues. This strategy entails training AI systems to concurrently synthesize and process various data sources, allowing them to understand both content and context better. With the increasing adoption of multimodal AI across diverse sectors, stakeholders are presented with a significant opportunity to capitalize on the expanding market. By providing innovative multimodal AI solutions tailored to meet the specific needs of various industries, stakeholders can play an important role in driving growth within the multimodal AI industry.

With continuous advancements in AI technologies, there is an increasing awareness that multimodal AI can be tailored to meet the specific needs and challenges of various industries. Whether in healthcare, education, finance, or entertainment, each sector has unique data characteristics and specific demands. Multimodal AI is strategically positioned to deliver personalized solutions by leveraging the capabilities of multiple data modalities. For instance, Globant's Advanced Video Search (AVS) uses Google Cloud's Gemini models to provide multimodal search, letting users locate specific clips, images, and moments within extensive video libraries using text or image-based queries.

Moreover, multimodal AI is utilized in developing advanced driver-assistance systems within the automotive industry. This involves integrating visual data from cameras, textual data from sensors, and audio data from in-car voice assistants to improve road safety and enhance the driving experience. For instance, in 2024, Volkswagen of America integrated a virtual assistant into the myVW app, enabling drivers to access owner's manuals and ask questions. With Gemini's multimodal capabilities, users can point their smartphone cameras at the dashboard to receive useful information about the indicator lights. This sector-specific approach is paving the way for a new generation of innovation, where each industry's distinctive challenges and opportunities are met with customized multimodal AI solutions.

Component Insights

The software segment led the market and accounted for a 65.0% global revenue share in 2024. Multimodal AI software comprises integrated systems designed to process several types of data concurrently, including images, text, audio, and video. These software solutions commonly integrate advanced technologies such as machine learning, deep learning, and natural language processing to facilitate a comprehensive understanding of multimodal information. In practical terms, multimodal AI software lets users create, implement, and oversee AI models that manage diverse data modalities cohesively.

The service segment is expected to register the fastest CAGR of 37.9% during the forecast period. Multimodal AI services encompass a broad spectrum of offerings tailored to diverse professional and managed services requirements. Professional services include consulting and strategic guidance for implementing multimodal AI solutions, as well as specialized training and workshops to equip teams with essential skills. Multimodal data integration services facilitate the seamless blending of various data types. Managed services deliver comprehensive solutions that cover the entire lifecycle of multimodal AI systems, including continuous improvement, infrastructure management, and performance optimization, enabling organizations to harness the advantages of multimodal AI.

Data Modality Insights

The text data segment accounted for the largest revenue share in 2024. Text data, being a fundamental component of communication and information exchange, is prevalent in various sectors, such as customer service, NLP, and content analysis. The ability of multimodal AI to effectively analyze and comprehend text data has made it a key solution for tasks such as chatbots, sentiment analysis, and document processing, driving its prominence and contributing significantly to the overall revenue within the multimodal AI industry.

The speech & voice data segment is projected to grow at the highest CAGR over the forecast period. The widespread adoption of voice-enabled devices, virtual assistants, and voice-activated applications across various industries has increased the importance of speech and voice data. For instance, in 2024, GoTo, an Indonesian digital ecosystem, introduced “Dira by GoTo AI,” a fintech voice assistant, to simplify tasks within its GoPay application.

Dira allows users to navigate the GoPay app and execute functions such as money transfers and bill payments via voice commands. In addition, advancements in speech recognition technology, improved language processing algorithms, and the rising popularity of voice-driven commands in smart devices have contributed to the segment's growth. The seamless integration of speech and voice data in multimodal AI applications has further solidified its position as a key market driver.

End Use Insights

The media & entertainment segment accounted for the largest revenue share in 2024, owing to the industry's increasing focus on enhancing user experiences, content personalization, and creative innovation. Multimodal AI technologies are particularly well-suited for applications within media and entertainment, where the combination of text, image, audio, and video data is crucial for delivering immersive and engaging content.

The BFSI segment is expected to register the fastest CAGR during the forecast period. Multimodal AI is employed for secure and user-friendly customer authentication, particularly through facial recognition, which strengthens security protocols in mobile apps, online banking, and ATM transactions. In the BFSI sector, chatbots and virtual assistants leverage multimodal AI to comprehend and address customer queries effectively. This involves handling text-based queries, interpreting images of documents, and incorporating voice commands to ensure a smooth customer service experience. For instance, an AI-driven system can evaluate a loan applicant's credit score while analyzing social media activities to gauge financial stability. JP Morgan’s DocLLM exemplifies this approach by integrating text data, metadata, and contextual information from financial documents, facilitating automatic document processing and risk assessment.

Enterprise Size Insights

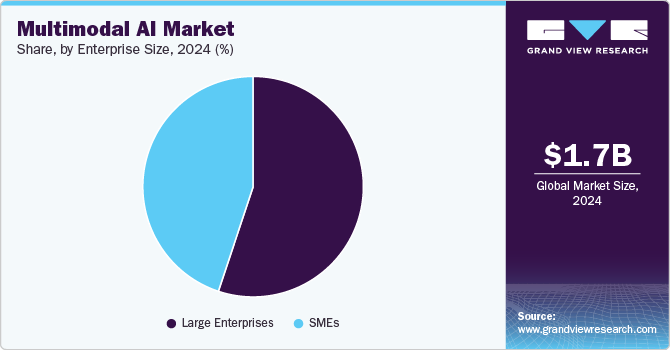

The large enterprise segment accounted for the largest revenue share in 2024. Large enterprises generally deal with diverse data types, including text, images, videos, and audio. Multimodal AI assists in addressing the complexity of these organizations' operations by providing comprehensive solutions that can analyze and interpret various modalities. In addition, multimodal AI platforms often offer customization options, allowing large enterprises to tailor the technology to their specific requirements. This level of customization is essential for addressing the varied and intricate processes within large organizations.

The SMEs segment is projected to grow at the highest CAGR during the forecast period. Multimodal AI solutions tailored for SMEs offer cost-effective options, making these advanced technologies more accessible to smaller businesses with limited budgets. Multimodal AI platforms customized for SMEs are more adaptable to smaller-scale workflows, offering solutions that are suited to specific operations and requirements of SMEs.

Regional Insights

The North America multimodal AI market led globally with a 48.0% share in 2024, fueled by the convergence of technologies and a rising demand for more sophisticated and human-like interactions between machines and users. A key driving force is the widespread adoption of smartphones and smart devices, coupled with the increasing availability of high-quality data. The region's emphasis on innovation creates an environment conducive to the progress of multimodal AI. North American companies are pioneering the development and implementation of multimodal AI solutions, reflecting the region's dedication to advancing technology and pushing the boundaries of AI to enhance user engagement and problem-solving.

U.S. Multimodal AI Market Trends

The multimodal AI market in the U.S. dominated the regional market in 2024 due to its position as a leader in AI innovation. This dominance stems from the presence of major technology corporations, a thriving startup ecosystem, and substantial government funding for AI initiatives. The country's strong emphasis on research and development and access to a skilled workforce accelerate the development and deployment of multimodal AI solutions. Furthermore, the widespread adoption of AI across various industries, including healthcare, retail, and manufacturing, contributes to the growth of the multimodal AI industry.

Europe Multimodal AI Market Trends

The Europe multimodal AI market is expected to grow at a significant CAGR during the forecast period, fueled by increasing investments in AI research and development, particularly in countries such as Germany, France, and the UK. The rising adoption of multimodal AI in key sectors such as healthcare, automotive, and manufacturing drives expansion within the multimodal AI industry. Moreover, supportive government policies and initiatives aimed at promoting AI innovation are expected to boost market growth further. Europe's focus on ethical AI development and data privacy also positions it as a leader in responsible AI deployment.

Asia Pacific Multimodal AI Market Trends

The multimodal AI market in Asia Pacific is anticipated to grow at the highest CAGR during the forecast period. One significant factor is the rapid adoption and integration of advanced technologies across various regional industries. Countries in the Asia Pacific, such as China, Japan, South Korea, and India, have witnessed substantial growth in their economies, leading to increased investments in AI. The region's large and diverse consumer base and the proliferation of smartphones and other smart devices have driven the demand for multimodal AI applications in areas such as e-commerce, healthcare, and finance. In addition, the growing focus on digital transformation initiatives by businesses and governments has further accelerated the deployment of multimodal AI solutions in the Asia Pacific region.

The China multimodal AI market dominated the regional market in 2024. The country's dominance is fueled by massive investments in AI research and development from both the government and the private sector. For instance, in 2025, the Bank of China, a state-owned entity, declared its intention to allocate a minimum of USD 136 million over the subsequent 5 years to support companies operating within the AI sector. This financial backing is intended to bolster the AI industry's infrastructure, promote technological innovation, and facilitate the integration of AI across various sectors. Furthermore, the government's strong support for AI innovation and a rapidly growing technology sector accelerate market growth. The widespread adoption of AI across various industries, including e-commerce, finance, and transportation, also contributes to the market's dominance.

Key Multimodal AI Company Insights

Some key players operating in the market include Google LLC; Microsoft; and Amazon Web Services, Inc.

- Google LLC has been a major player in advancing multimodal AI technologies, leveraging machine learning, deep learning, and natural language processing. The company's contributions to the field include the development of state-of-the-art models for image and speech recognition, language translation, and understanding complex data modalities.

- Microsoft is a multinational technology company renowned for its software products, operating systems, and cloud computing services. Microsoft's Azure cloud platform provides a suite of AI services, including computer vision, speech recognition, and natural language processing. These services empower developers to build multimodal AI applications.

Clarifai, Inc., and SenseTime are some emerging market participants in the multimodal AI market.

- Clarifai, Inc. is a prominent player in the multimodal AI industry, focusing on visual recognition and analysis. The company offers a comprehensive platform that harnesses the power of multimodal AI to interpret and analyze visual data, including images and videos.

- SenseTime is renowned for its advancements in AI and computer vision technologies. The company specializes in a diverse range of AI applications, with a notable emphasis on facial recognition, image and video analysis, and solutions for autonomous driving.

Key Multimodal AI Companies:

The following are the leading companies in the multimodal AI market. These companies collectively hold the largest market share and dictate industry trends.

- Aimesoft

- Amazon Web Services, Inc.

- Google LLC

- IBM Corporation

- Jina AI GmbH

- Meta

- Microsoft

- OpenAI, L.L.C.

- Twelve Labs Inc.

- Uniphore Technologies Inc.

Recent Developments

- In February 2025, Google launched Gemini 2.0 Pro Experimental, its latest flagship AI model, alongside other AI updates, and began rolling out the Gemini 2.0 Flash Thinking model in the Gemini app to broaden the accessibility of its advanced AI reasoning capabilities.

- In October 2024, India launched BharatGen, its first government-funded Multimodal Large Language Model (MLLM) initiative, designed to improve public service delivery and citizen engagement. Led by IIT Bombay under the National Mission on Interdisciplinary Cyber-Physical Systems (NM-ICPS), BharatGen aims to develop generative AI systems capable of producing high-quality multimodal content and text in various Indian languages.

- In December 2023, Alphabet Inc., an American multinational technology conglomerate holding company, unveiled the initial phase of its advanced AI model, Gemini. This groundbreaking model represents the first instance of surpassing human experts in performance on Massive Multitask Language Understanding (MMLU), a widely recognized benchmark for evaluating the capabilities of language models.

- In December 2023, Meta revealed its plan to introduce multimodal AI functionalities that provide information about the surroundings collected through the cameras and microphones of the company's smart glasses. By saying “Hey Meta” while wearing the Ray-Ban smart glasses, users can activate a virtual assistant capable of both seeing and hearing the events in their immediate environment.

- In October 2023, Reka AI, Inc. unveiled Yasa-1, a groundbreaking multimodal AI assistant designed to extend its understanding beyond text to include images, short videos, and audio snippets. Yasa-1 offers enterprises the flexibility to tailor their capabilities to private datasets of various modalities, enabling innovative experiences for diverse use cases. With support for 20 languages, the assistant boasts the capacity to deliver contextually informed answers sourced from the internet, handle extensive contextual documents, and even execute code.

Multimodal AI Market Report Scope

| Report Attribute | Details |
|---|---|
| Market size value in 2025 | USD 2.27 billion |
| Revenue forecast in 2030 | USD 10.89 billion |
| Growth rate | CAGR of 36.8% from 2025 to 2030 |
| Actual data | 2017 - 2024 |
| Forecast period | 2025 - 2030 |
| Quantitative units | Revenue in USD million/billion and CAGR from 2025 to 2030 |
| Report coverage | Revenue forecast, company ranking, competitive landscape, growth factors, and trends |
| Segments covered | Component, data modality, end use, enterprise size, region |
| Regional scope | North America; Europe; Asia Pacific; Latin America; MEA |
| Country scope | U.S.; Canada; Germany; UK; France; China; Japan; India; South Korea; Australia; Brazil; Mexico; KSA; UAE; South Africa |
| Key companies profiled | Aimesoft; Amazon Web Services, Inc.; Google LLC; IBM Corporation; Jina AI GmbH; Meta; Microsoft; OpenAI, L.L.C.; Twelve Labs Inc.; and Uniphore Technologies Inc. |
| Customization scope | Free report customization (equivalent up to 8 analysts working days) with purchase. Addition or alteration to country, regional & segment scope. |

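The growth rate and the two market-size values listed in the report scope can be cross-checked with the standard compound annual growth rate relation, end value = start value × (1 + CAGR)^years. The sketch below is illustrative only and is not part of the report's methodology: the function names `project_value` and `implied_cagr` are hypothetical, and it assumes five compounding periods between 2025 and 2030.

```python
def project_value(start_value: float, cagr: float, years: int) -> float:
    """Compound start_value forward by `years` periods at annual rate `cagr`."""
    return start_value * (1.0 + cagr) ** years


def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Return the constant annual growth rate that links start_value to end_value."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Figures taken from the report scope (USD billion).
MARKET_2025 = 2.27
MARKET_2030 = 10.89
REPORTED_CAGR = 0.368
YEARS = 2030 - 2025  # assumption: five compounding periods

projected_2030 = project_value(MARKET_2025, REPORTED_CAGR, YEARS)
cagr_check = implied_cagr(MARKET_2025, MARKET_2030, YEARS)

print(f"Projected 2030 value: USD {projected_2030:.2f} billion")
print(f"Implied CAGR 2025-2030: {cagr_check:.1%}")
```

Under these assumptions, the projected 2030 value comes out at roughly USD 10.88 billion and the implied rate at about 36.8%, so the reported figures agree with each other to within rounding.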

Global Multimodal AI Market Report Segmentation

This report forecasts revenue growth at global, regional, and country levels and provides an analysis of the latest industry trends in each of the sub-segments from 2017 to 2030. For this study, Grand View Research has segmented the global multimodal AI market report based on component, data modality, end-use, enterprise size, and region.

- Component Outlook (Revenue, USD Million, 2017 - 2030)
  - Software
  - Service

- Data Modality Outlook (Revenue, USD Million, 2017 - 2030)
  - Image Data
  - Text Data
  - Speech & Voice Data
  - Video & Audio Data

- End-Use Outlook (Revenue, USD Million, 2017 - 2030)
  - Media & Entertainment
  - BFSI
  - IT & Telecommunication
  - Healthcare
  - Automotive & Transportation
  - Gaming
  - Others

- Enterprise Size Outlook (Revenue, USD Million, 2017 - 2030)
  - Large Enterprise
  - SMEs

- Regional Outlook (Revenue, USD Million, 2017 - 2030)
  - North America
    - U.S.
    - Canada
  - Europe
    - Germany
    - UK
    - France
  - Asia Pacific
    - China
    - Japan
    - India
    - South Korea
    - Australia
  - Latin America
    - Brazil
    - Mexico
  - Middle East and Africa (MEA)
    - KSA
    - UAE
    - South Africa

Frequently Asked Questions About This Report

- The global multimodal AI market size was estimated at USD 1.74 billion in 2024 and is expected to reach USD 2.27 billion in 2025.

- The global multimodal AI market is expected to grow at a compound annual growth rate of 36.8% from 2025 to 2030 to reach USD 10.89 billion by 2030.

- North America dominated the multimodal AI market with a share of 48.0% in 2024, fueled by the convergence of technologies and a rising demand for more sophisticated and human-like interactions between machines and users.

- Some key players operating in the multimodal AI market include Aimesoft; Amazon Web Services, Inc.; Google LLC; IBM Corporation; Jina AI GmbH; Meta; Microsoft; OpenAI, L.L.C.; Twelve Labs Inc.; and Uniphore Technologies Inc.

- Key factors driving the multimodal AI market growth include the increasing need for more immersive and context-aware user experiences in applications such as virtual assistants, customer service, and content recommendation, and the growing integration of multimodal AI in industry-specific applications, such as healthcare diagnostics, autonomous vehicles, and security surveillance.
